I am currently a third-year Master’s student in Applied Mathematics at Fudan University. My research focuses on generative machine learning, with particular emphasis on probabilistic distribution modeling, generative models, and multimodal representation learning. At present, I am exploring recursive and looped architectures, such as diffusion language models, tiny recursive models, and looped transformers, for complex reasoning tasks. I plan to apply for PhD programs in Fall 2026 and am seeking potential advisors and collaborators with shared research interests. Feel free to reach out if you’d like to learn more about my work, chat, or explore potential collaborations. This is my Google Scholar.

🔥 News

- 2025.09: 🎉 GOOD is accepted by NeurIPS 2025. Got my visa! See you in San Diego!

- 2025.09: 🎉 OrderMind is accepted by NeurIPS 2025. Congrats to Yuxiang!

- 2025.09: 🎉 Loong is accepted by a NeurIPS 2025 workshop. Great work from the Camel-AI team!!

- 2025.06: 🎉 Dark-ISP is accepted by ICCV 2025. Congrats to Jiasheng! Hope to see you in Hawaii!

- 2025.05: Started my internship at Shanghai AI Lab with Jie Fu! Do you have a Big AI Dream~

- 2025.04: Welcomed two adorable cats into my life: Fellow and Putao 🐱🍇

- 2025.01: 🎉 MVP is accepted by ICLR 2025. See you in Singapore!

- 2024.12: Started my internship with Prof. Chenyang Si and Ziwei Liu~

📝 Selected Publications

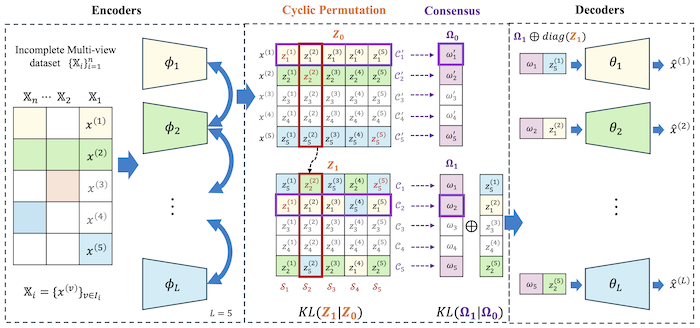

MVP: Deep Incomplete Multi-view Learning via Cyclic Permutation of VAEs

Xin Gao, Jian Pu

- MVP introduces a novel approach to incomplete multi-view representation learning by leveraging latent space correspondences in Variational Auto-Encoders, enabling the inference of missing views and enhancing the consistency of multi-view data even with irregularly missing information.

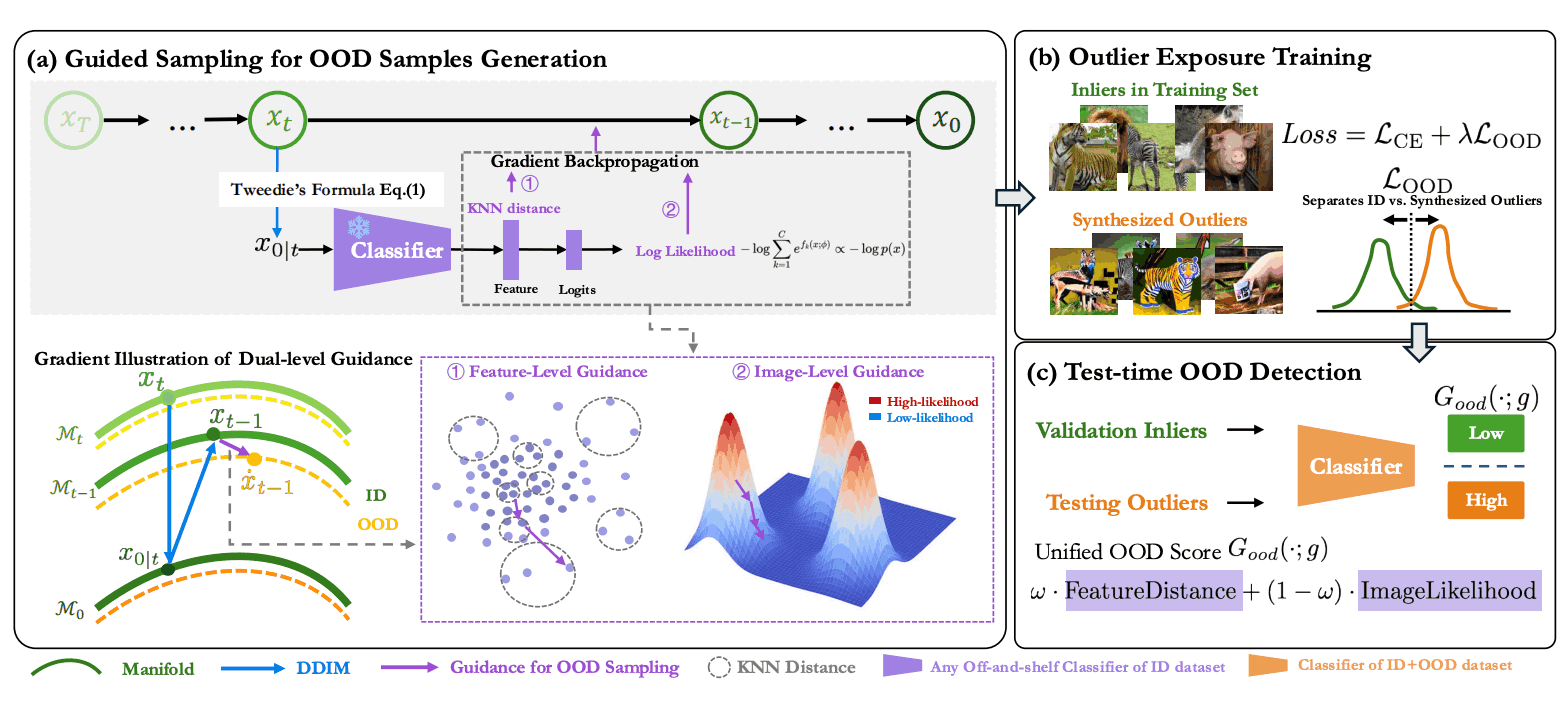

GOOD: Training-Free Guided Diffusion Sampling for Out-of-Distribution Detection

Xin Gao, Jiyao Liu, Guanghao Li, Yueming Lyu, Jianxiong Gao, Weichen Yu, Ningsheng Xu, Liang Wang, Caifeng Shan, Ziwei Liu, Chenyang Si

- GOOD is a training-free diffusion guidance framework that shapes a robust OOD/ID decision boundary. It steers sampling with two gradients—image-level toward low-density regions and feature-level toward sparse zones—to generate diverse, controllable OOD examples.

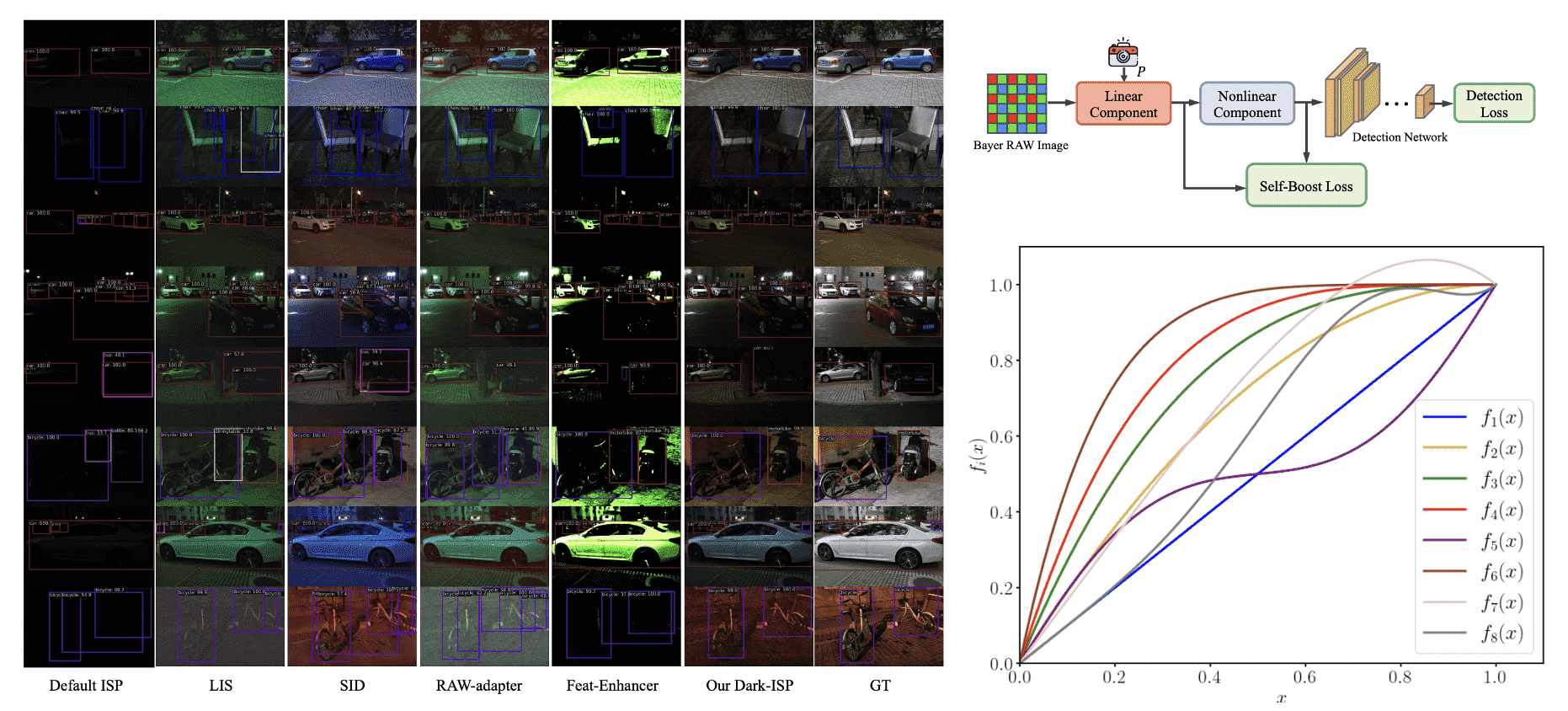

Dark-ISP: Enhancing RAW Image Processing for Low-Light Object Detection

Jiasheng Guo#, Xin Gao#, Yuxiang Yan, Guanghao Li, Jian Pu

- Dark-ISP is a lightweight, self-adaptive ISP plugin that enhances low-light object detection by processing Bayer RAW images. It breaks down traditional ISP pipelines into optimized linear and nonlinear sub-modules, using physics-informed priors for automatic RAW-to-RGB conversion.

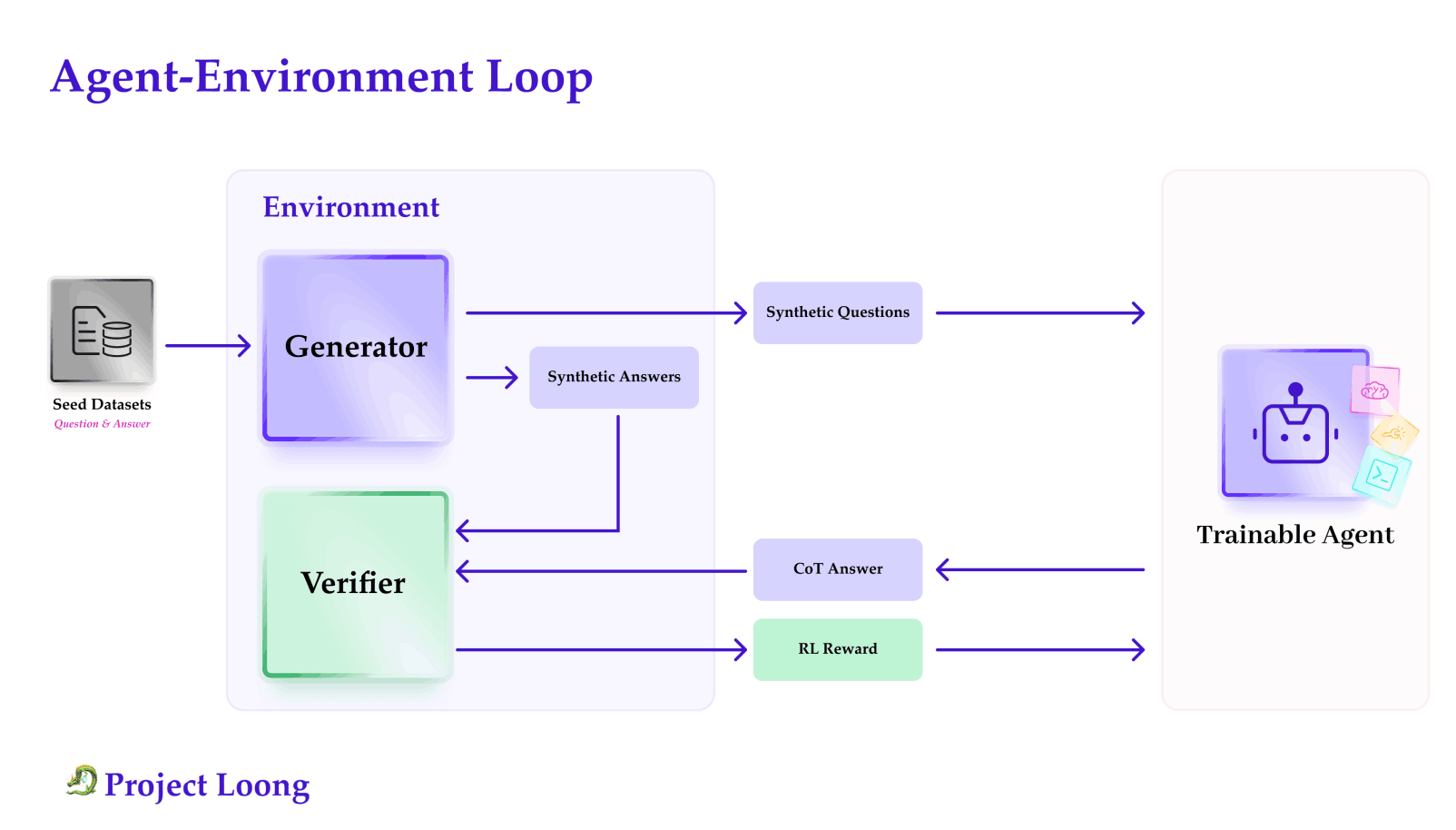

Loong: Synthesize Long Chain-of-Thoughts at Scale through Verifiers

Xingyue Huang, Rishabh, Gregor Franke, Ziyi Yang, Jiamu Bai, Weijie Bai, Jinhe Bi, Zifeng Ding, Yiqun Duan, Chengyu Fan, Wendong Fan, Xin Gao et al.

- Loong is an open-source framework for scalable, verifiable reasoning data. It pairs LoongBench (8,729 human-vetted, code-checkable examples across 12 domains) with LoongEnv, a modular generator forming an agent–environment loop for RL with verifiable rewards and broad-domain CoT training.

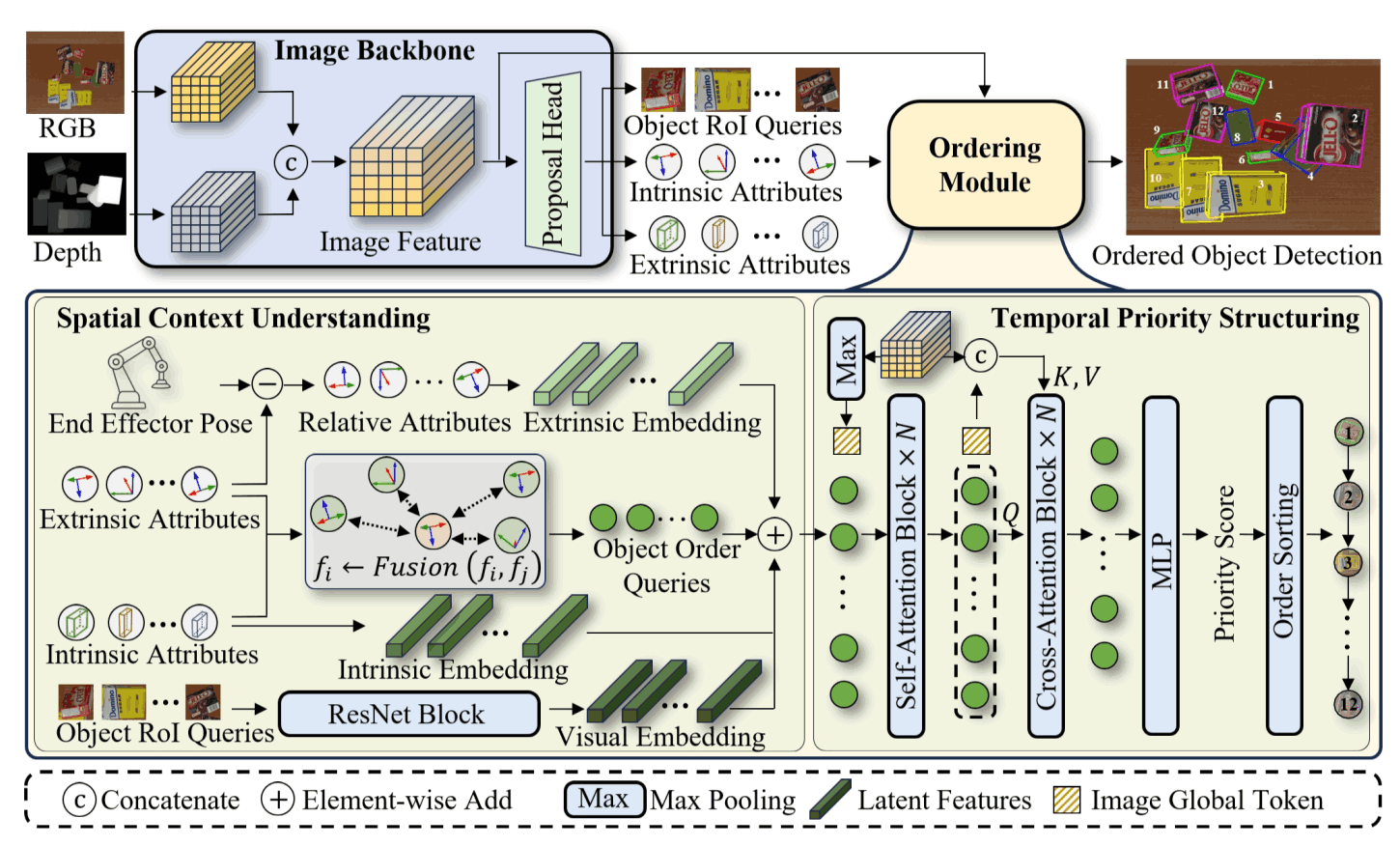

Learning Spatial-Aware Manipulation Ordering

Yuxiang Yan, Zhiyuan Zhou, Xin Gao, Guanghao Li, Shenglin Li, Jiaqi Chen, Qunyan Pu, Jian Pu

- This paper introduces OrderMind, a spatial-aware manipulation ordering framework that learns object priorities from local geometry via a kNN spatial graph and a lightweight temporal module, supervised by VLM-distilled spatial priors. It also presents the Manipulation Ordering Benchmark (163k+ samples) for cluttered scenes.

Why PhD?

I’ve been thinking a lot recently: why a PhD?

In fact, I was originally admitted to Fudan University as a direct PhD student after my undergraduate studies. However, at that time, the available research directions in my department were mostly focused on interdisciplinary areas between neuroscience and AI, which didn’t fully align with my core interests - I’ve always been more fascinated by the algorithmic and mathematical foundations of intelligence.

Coming from a pure mathematics background, I realized that I hadn’t yet received systematic training in scientific research, especially in fields related to AI and machine learning. I felt that before committing to a long and challenging PhD journey, I needed to go through a complete and rigorous master’s program - to learn how to ask meaningful questions, design experiments, and build research intuition.

Fortunately, during this process, I discovered the genuine joy of research. I found excitement in turning abstract ideas into working systems, in bridging theoretical insights with practical models, and in those quiet moments of struggling with an equation until it suddenly made sense. Research became more than a requirement - it became a way of thinking.

That’s why I now seek a PhD not as an academic credential, but as a natural continuation of this intellectual curiosity. I want to push the boundary between representation structure and generative intelligence, to explore how reasoning and understanding can emerge from learning systems, and to work with mentors and peers who share this deep fascination. To me, a PhD represents the freedom and responsibility to create something that didn’t exist before - and I’m ready for that challenge.

🎖 Honors and Awards

- 2023.09 Fudan University Zhicheng Freshman Second Prize Scholarship (Top 5%)

- 2023.06 Outstanding Graduate of Shanghai

- 2022.11 Second Prize in the Chinese Mathematics Competitions (Category A)

- 2021.12 National Scholarship, China

- 2021.09 National Second Prize in the China Undergraduate Mathematical Contest in Modeling

- 2020.12 Shanghai Scholarship

👨‍💼 Academic Service

- Conference Reviewer: NeurIPS 2025, ICCV 2025, ICLR 2026

📖 Education

- 2023.09 - 2026.06 (now), Master of Applied Mathematics, Fudan University, Shanghai, China.

- 2019.09 - 2023.06, Bachelor of Mathematics, Donghua University, Shanghai, China.

💻 Internships

- 2025.05 - Now, Shanghai AI Lab, Shanghai, China.

- 2023.03 - 2023.07, Winning AI Lab, Shanghai, China.

📚 Learning Materials

😁 If you would like the following materials without watermarks, please contact me by email and specify your intended use.

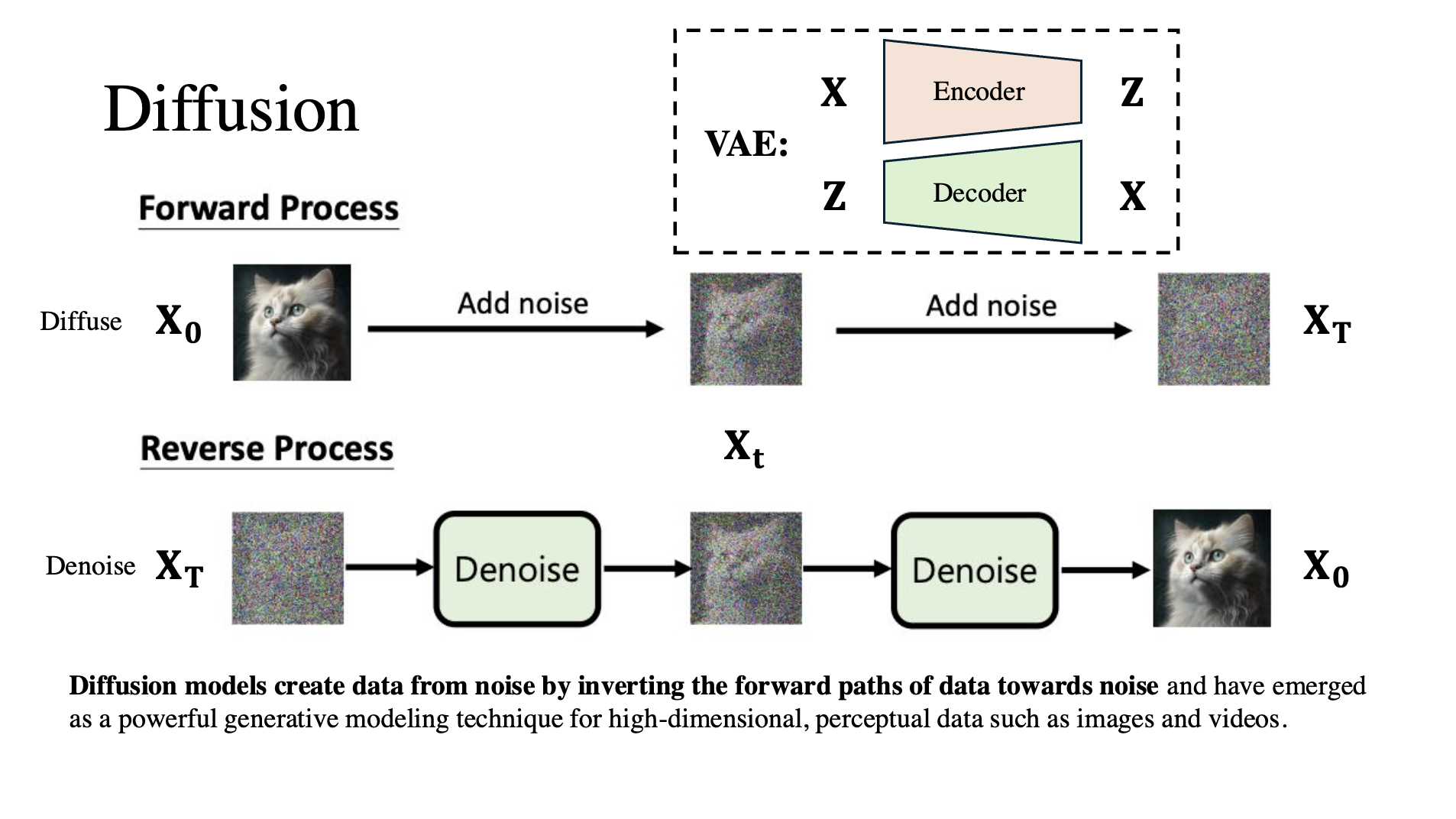

Material 1: Frontiers in Diffusion Model Technologies (1)

This document provides an overview of key concepts in diffusion models, focusing on their theoretical foundations, development timeline, and recent advancements. It includes detailed discussions of VAE, DDPM, DDIM, SDE, and ODE formulations, as well as conditional guidance. It also covers the evolution of Stable Diffusion, including Latent Diffusion, VQ-VAE, and DiT. Lastly, the document highlights a recent method, IC-Light, set to be presented at ICLR 2025.

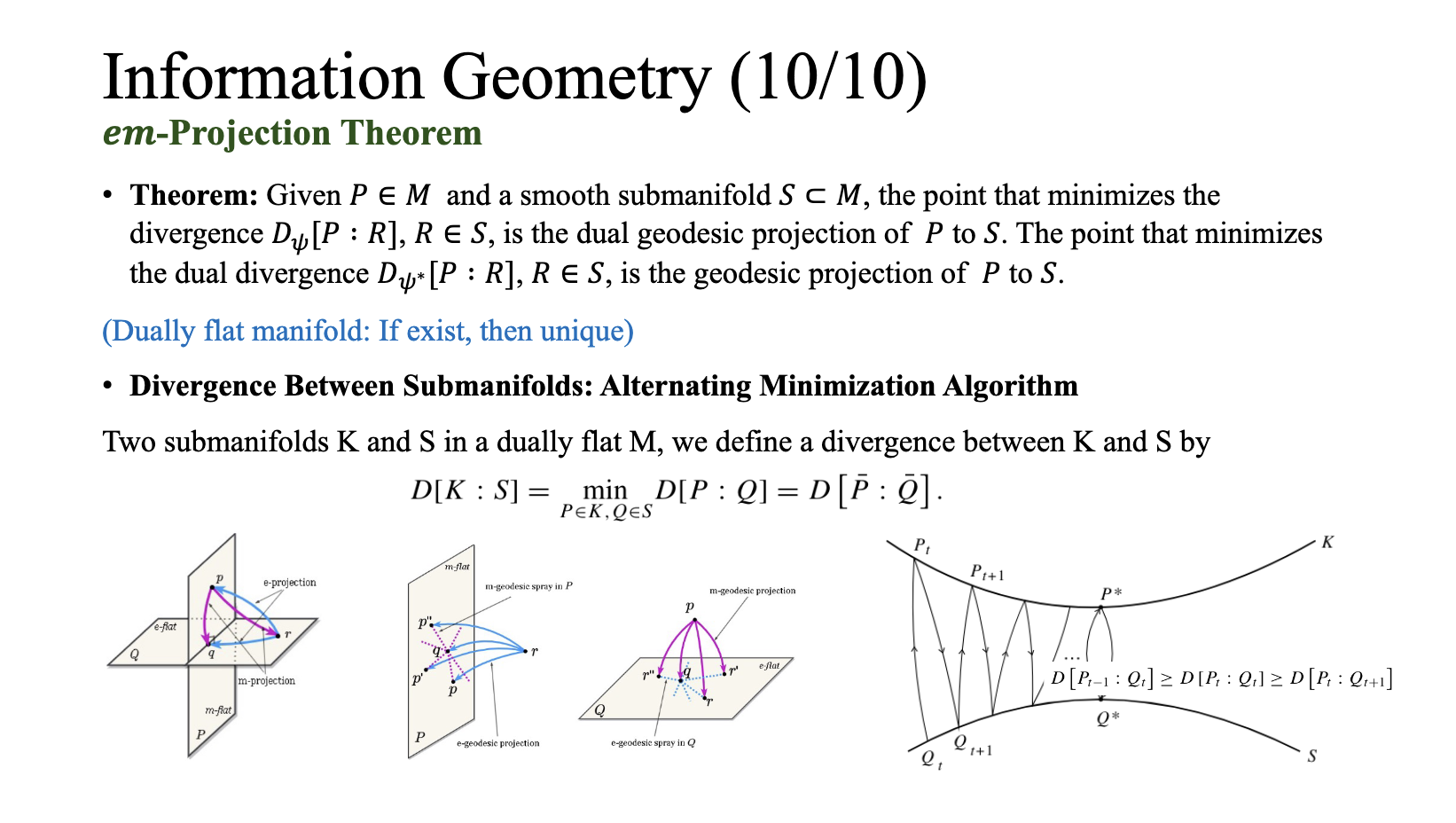

Material 2: Tutorial of Information Geometry and t3-VAE

This document introduces the t3-Variational Autoencoder (ICLR 2024), which uses Student’s t-distributions to model heavy-tailed data distributions and improve latent variable representations. It also explores the framework of Information Geometry, focusing on how generative models can be understood through statistical manifolds, divergences, and Riemannian metrics, providing a deeper understanding of probability distributions and their applications in machine learning, signal processing, and neuroscience.

Material 3: EM Algorithm and X-metric

This document introduces the X-metric framework (PAMI 2023), an N-dimensional information-theoretic approach designed for groupwise registration and deep combined computing, with applications in advanced machine learning tasks. It also covers the theoretical foundations, including entropy, mutual information, and maximum likelihood estimation (MLE), alongside the framework’s modifications for deep computing and network training.